Shunchi Zhang

Hi, I am Shunchi Zhang (张顺驰), a Master's student in Computer Science at Johns Hopkins University (JHU).

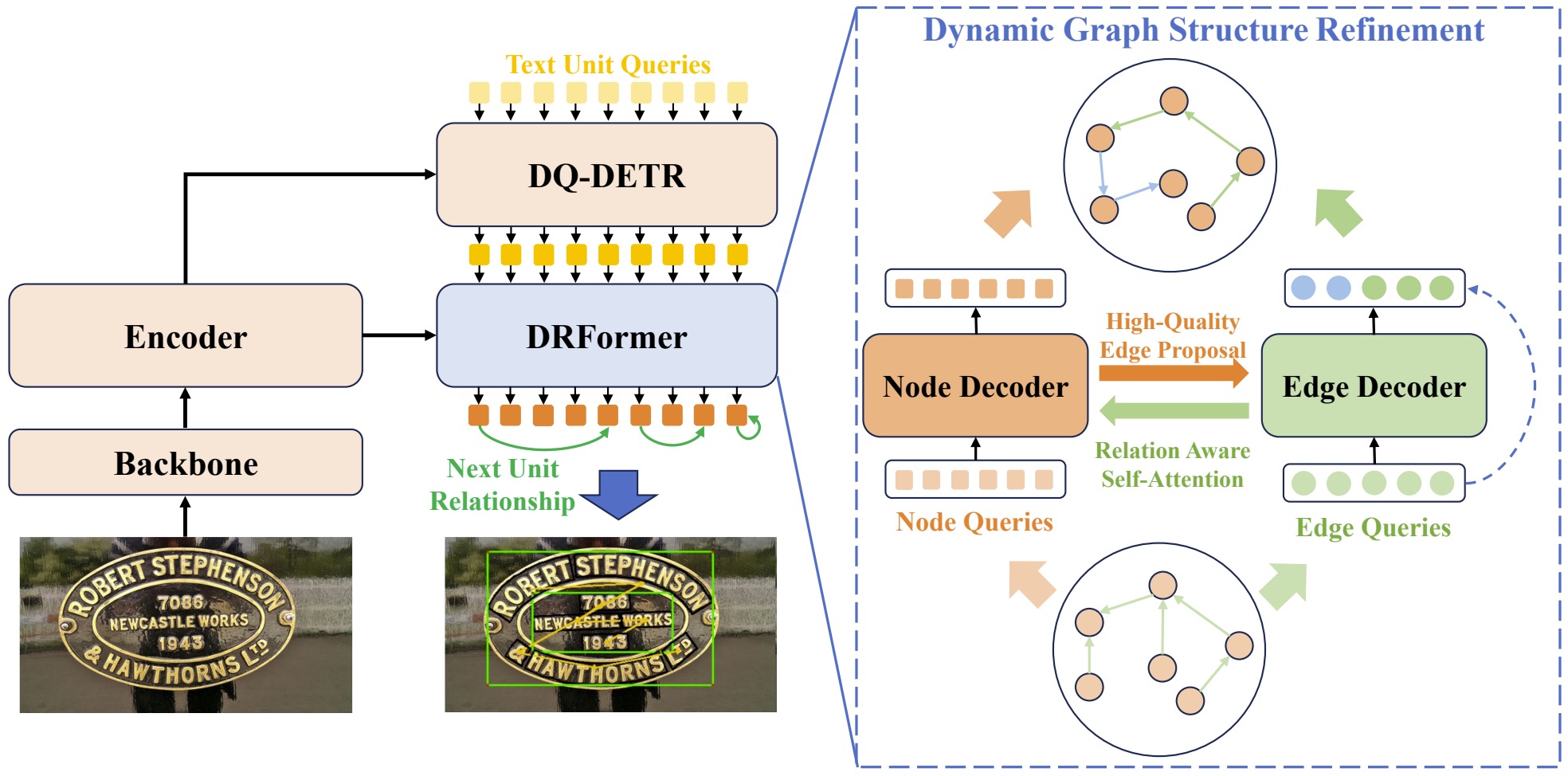

Previously, I interned at WeChat AI @ Tencent, mentored by Mo Yu and Lemao Liu, and at Microsoft Research Asia (MSRA), mentored by Zhuoyao Zhong and Lei Sun.

I received my Bachelor's degree in Quantitative Economics from Xi'an Jiaotong University (XJTU) in 2024, and had a great time at the University of Wisconsin-Madison (UW Madison) as a visiting student in Mathematics during Spring 2022.